8 hours of instruction

Explore how to use Transformer-based natural language processing (NLP) models for text classification tasks, such as categorizing documents. You will also explore how to leverage Transformer-based models for named-entity recognition (NER) tasks, and how to analyze various model features, constraints, and characteristics to determine which model is best suited for a particular use case based on metrics, domain specificity, and available resources.

OBJECTIVES

- Understand how text embeddings for NLP tasks have rapidly evolved, from Word2Vec to recurrent neural network (RNN)-based embeddings to Transformers

- See how Transformer architecture features, especially self-attention, are used to create language models without RNNs

- Use self-supervision to improve the Transformer architecture in BERT, Megatron, and other variants for superior NLP results

- Leverage pre-trained, modern NLP models to solve multiple tasks such as text classification, NER, and question answering

- Manage inference challenges and deploy refined models for live applications

PREREQUISITES

None

SYLLABUS & TOPICS COVERED

- Introduction

  - Meet the instructor and create an account

- Introduction to Transformers

  - Build the Transformer architecture in PyTorch and calculate the self-attention matrix

  - Translate English to German with a pre-trained Transformer model

- Self-Supervision, BERT, and Beyond

  - Build a text classification project to classify abstracts

  - Build a named-entity recognition (NER) project to identify disease names in text

  - Improve project accuracy with domain-specific models

- Inference and Deployment for NLP

  - Prepare the model for deployment

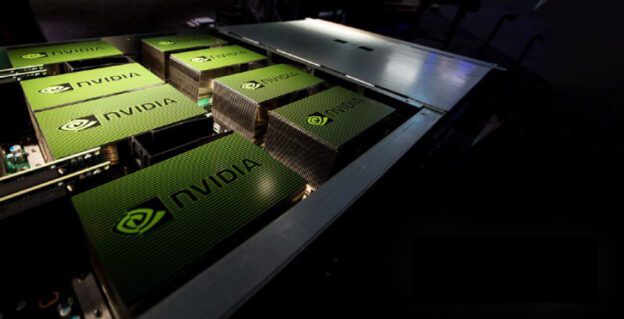

  - Optimize the model with NVIDIA TensorRT

  - Deploy the model and test it

- Final Review

  - Review key learnings and answer questions

  - Complete the assessment, earn a certificate, and complete the workshop survey

  - Learn how to set up your own AI application development environment

SOFTWARE REQUIREMENTS

Each participant will be provided with dedicated access to a fully configured, GPU-accelerated workstation in the cloud.