8 hours of instruction

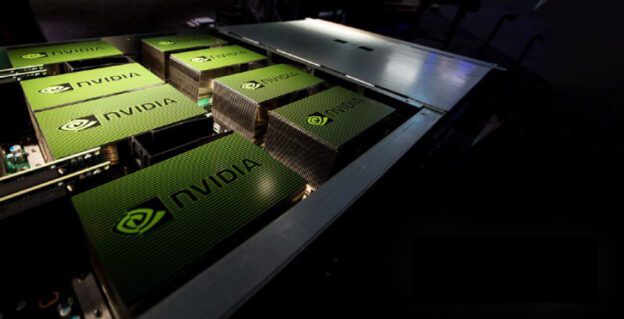

Find out how to use multiple GPUs to train neural networks and effectively parallelize training of deep neural networks using TensorFlow.
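The most common way to parallelize training is synchronous data parallelism, which is the approach Horovod takes. Each GPU processes a different slice of the batch, and the per-GPU gradients are averaged with an allreduce before every update. The following is a minimal sketch of why that works. It assumes a toy one-weight linear model and plain Python in place of TensorFlow and real GPUs, and all function names are hypothetical. Averaging the gradients of equal-sized shards gives the same result as computing the gradient of the whole batch.

```python
# Hypothetical simulation of synchronous data parallelism (no GPUs or Horovod needed).
# Each simulated "worker" computes the gradient of a mean-squared-error loss on its
# own equal-sized shard of the batch. Averaging those gradients (what an averaging
# allreduce produces) recovers the gradient of the full batch.

def mse_grad(w, xs, ys):
    """Gradient of mean((w*x - y)^2) with respect to the scalar weight w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def shard(seq, num_workers):
    """Split seq into num_workers contiguous, equal-sized shards."""
    assert len(seq) % num_workers == 0, "batch must divide evenly across workers"
    size = len(seq) // num_workers
    return [seq[i * size:(i + 1) * size] for i in range(num_workers)]


def data_parallel_grad(w, xs, ys, num_workers):
    """Average the per-worker gradients, as a gradient allreduce would."""
    grads = [mse_grad(w, sx, sy)
             for sx, sy in zip(shard(xs, num_workers), shard(ys, num_workers))]
    return sum(grads) / num_workers


xs = [float(i) for i in range(8)]
ys = [3.0 * x + 1.0 for x in xs]
w = 0.5
full = mse_grad(w, xs, ys)
parallel = data_parallel_grad(w, xs, ys, num_workers=4)
print(full, parallel)  # prints: -94.5 -94.5
```

The equivalence depends on the shards being the same size. With uneven shards, a plain average of shard gradients would over-weight the smaller shards.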

OBJECTIVES

- Stochastic Gradient Descent, a crucial tool in parallelized training

- Batch size and its effect on training time and accuracy

- Transforming a single-GPU implementation into a Horovod multi-GPU implementation

- Techniques for maintaining high accuracy when training across multiple GPUs
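The first two objectives concern stochastic gradient descent and batch size. The sketch below shows the basic mechanics of mini-batch SGD. Batch size determines how many parameter updates happen per epoch, and each update relies on a gradient estimated from only `batch_size` examples. It assumes a toy noise-free linear regression in plain Python rather than TensorFlow, and the `sgd` helper and its parameters are hypothetical.

```python
import random


def sgd(xs, ys, batch_size, lr=0.01, epochs=50, seed=0):
    """Hypothetical mini-batch SGD for y ~ w*x + b under a mean-squared-error loss.
    Returns the learned (w, b) and the total number of parameter updates."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    idx = list(range(len(xs)))
    updates = 0
    for _ in range(epochs):
        rng.shuffle(idx)  # new random order each epoch (the "stochastic" part)
        for start in range(0, len(idx), batch_size):
            batch = idx[start:start + batch_size]
            n = len(batch)
            # Gradient of mean((w*x + b - y)^2), estimated from this mini-batch only.
            gw = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / n
            gb = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / n
            w -= lr * gw
            b -= lr * gb
            updates += 1
    return w, b, updates


xs = [i / 10 for i in range(-10, 11)]  # 21 points in [-1, 1]
ys = [2.0 * x + 1.0 for x in xs]       # noise-free target: w = 2, b = 1
for bs in (1, 7, 21):
    w, b, updates = sgd(xs, ys, batch_size=bs, lr=0.1, epochs=200)
    print(f"batch_size={bs:2d}  updates={updates:4d}  w={w:.4f}  b={b:.4f}")
```

Over a fixed number of epochs, batch sizes of 1, 7, and 21 perform 4200, 600, and 200 updates, respectively. On a GPU, larger batches let more examples be processed in parallel per update, so fewer, larger steps can shorten wall-clock time per epoch. Whether accuracy holds up at large batch sizes is the trade-off the course examines.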

PREREQUISITES

None

SYLLABUS & TOPICS COVERED

- Introduction
  - Meet the instructor
  - Create an account

- Stochastic Gradient Descent and the Effects of Batch Size
  - Understand the issues with sequential single-thread data processing and the theory behind speeding up applications with parallel processing
  - Explore loss functions, gradient descent, and stochastic gradient descent (SGD)
  - Learn the effect of batch size on accuracy and training time

- Training on Multiple GPUs with Horovod
  - Discover the benefits of training on multiple GPUs with Horovod
  - Learn to transform a single-GPU training implementation on the Fashion-MNIST dataset into a Horovod multi-GPU implementation

- Maintaining Model Accuracy When Scaling to Multiple GPUs
  - Understand why accuracy can decrease when parallelizing training on multiple GPUs
  - Explore tools for maintaining accuracy when scaling training to multiple GPUs

- Final Review
  - Review key learnings and answer questions
  - Complete the assessment and earn a certificate
  - Complete the workshop survey
  - Learn how to set up your own AI application development environment
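The syllabus does not name the specific tools used to maintain accuracy at scale. The following therefore sketches one widely used heuristic and should not be read as the course's exact method. When N workers each keep their per-GPU batch size, the global batch grows by a factor of N. A common response is to scale the learning rate by N (the "linear scaling rule") and to ramp it up gradually over the first steps, since a large learning rate at the start of training can destabilize optimization. Horovod's own Keras examples scale the learning rate by `hvd.size()`. The `scaled_lr` function and its parameters are hypothetical.

```python
def scaled_lr(step, base_lr, num_workers, warmup_steps):
    """Hypothetical learning-rate schedule: linear scaling rule plus linear warmup.

    The target learning rate is base_lr * num_workers, because the global batch
    is num_workers times larger than the single-GPU batch. During the first
    warmup_steps optimizer steps, the rate ramps linearly from base_lr to that target.
    """
    target = base_lr * num_workers
    if warmup_steps > 0 and step < warmup_steps:
        return base_lr + (target - base_lr) * step / warmup_steps
    return target


for step in (0, 50, 100, 500):
    print(step, scaled_lr(step, base_lr=0.01, num_workers=8, warmup_steps=100))
```

The schedule starts at the single-GPU rate of 0.01, reaches roughly 0.045 halfway through warmup, and holds at 0.08 from step 100 onward.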

SOFTWARE REQUIREMENTS

Each participant will be provided with dedicated access to a fully configured, GPU-accelerated workstation in the cloud.
